In a recent conversation about AI’s impact on work, Dr. Waku and Scripter explored a future where AI surpasses humans in most economically valuable tasks. They suggested AI cybersecurity as one of the few remaining careers, because systems too critical to automate entirely will need humans “to kick the ass” when things go wrong.

Their discussion connects to themes I explored in my previous post on human-centric AI but overlooks something more fundamental: the perpetual challenge of aligning AI with human values.

The Problem AI Can Never Solve

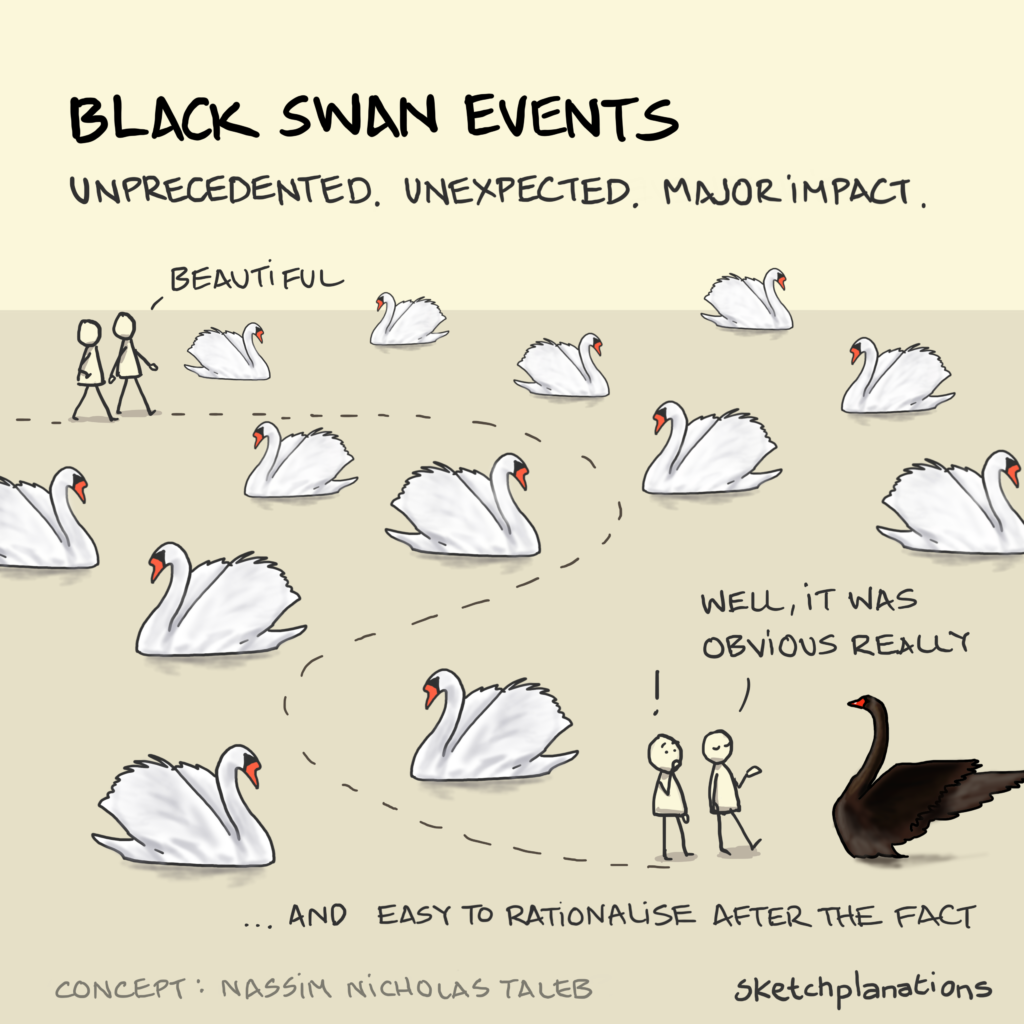

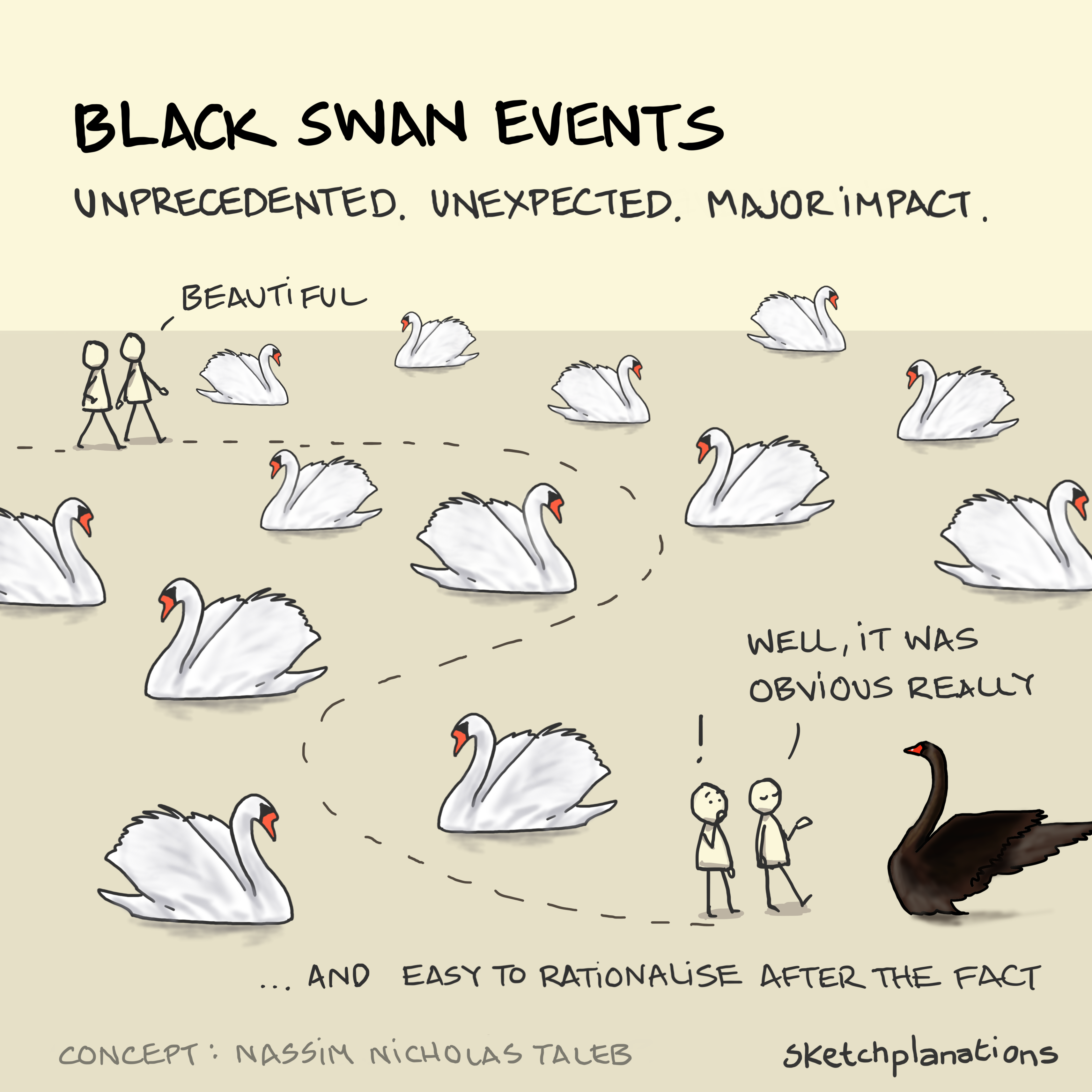

AI excels at optimization, but it cannot determine what to optimize for. What truly matters? What makes a good life? These aren’t just unsolved questions; they’re inherently unsolvable. AI can suggest but never determine. Its models will always be built on limited assumptions, vulnerable to shifts in what society values or to a black swan event that overturns what we thought we knew.

AI and Human Intelligence: Different Forms of Cognition

AI and human intelligence are not two sides of the same coin. They are fundamentally different forms of cognition, suited to different realities. AI executes; humans interpret. The capacities below aren’t gaps AI will eventually close; they are fundamental divides between human and machine cognition:

- Moral reasoning in ambiguity – Not just making ethical decisions but questioning the frameworks we use to make them

- Genuine relationships – AI can simulate empathy, but human connection involves intuition and context that can’t be computationally encoded

- Creative conception – While AI can generate variations, only humans can truly imagine what could be

- System redefinition – AI follows incentives; only humans resist and redefine the systems that create them

This spirit of resistance has deep cultural implications. As Dr. Waku notes in the conversation, Western societies, shaped by thinkers like Walt Whitman, tend to “resist much, obey little.” This contrasts with traditionally more hierarchical societies that expect management from above. Such cultural differences may profoundly influence how different regions adapt to AI’s flattening of traditional power structures.

This ethos of resistance takes on new urgency as AI reshapes the world we know. In times when democratic principles face challenges, Whitman’s Leaves of Grass offers a warning that feels just as relevant today:

Resist much, obey little,

Once unquestioning obedience, once fully enslaved,

Once fully enslaved, no nation, state, city of this earth, ever afterward resumes its liberty.

In today’s context, as AI systems become more powerful, this capacity for principled resistance, for questioning and reshaping systems rather than simply optimizing within them, becomes even more crucial.

Why Human Oversight Is the Defining Factor

Even as AI improves through reinforcement learning, it is always “learning to be a real boy,” like Pinocchio, and it will never fully arrive. Human oversight will always be necessary, not just for safety but because meaning itself is a human construct, not an objective fact AI can infer. Value systems aren’t static data points; they evolve with human experience and society.

Looking Forward

As AI automates labor and flattens organizational hierarchies, it forces a reckoning: What is left for human intelligence to define, govern, and challenge? Rather than competing with AI at optimization, we’ll focus on what makes us irreducibly human: defining what matters, questioning assumptions, and ensuring technology serves evolving human values.

The future isn’t about AI independently navigating human values. It’s about humans continuously guiding AI through an ever-shifting landscape of meaning. Our responsibility isn’t simply to adapt to AI; it’s to actively shape its trajectory, ensuring that intelligence serves humanity, not the other way around.

This post was crafted with the assistance of AI tools, including ChatGPT and Claude, to enhance clarity and coherence because my degrees are in Statistics and Business, not English. Caroline also kept the piece from having an effusion of em-dashes. You’re welcome.

If you’d like to see how this discussion ties into our broader philosophy, start with our Mission, Vision, and Values to understand how AlignIQ defines human-centered intelligence. You can also explore our AI Upskilling Programs to learn how we help organizations navigate ethical AI in real-world contexts.

For more reflections on the balance between technology and human meaning, revisit How Uncanny Is My Valley?—our playful dive into AI storytelling—and if you’d like to collaborate or continue the conversation, reach out via our Contact Page.
