Navigate AI.

ADVANCE WHAT MATTERS.

Category: AI Trends & Thought Leadership

-

Many people assume that AI thinks the way a human does. While its outputs may resemble how a human expresses thoughts, AI is mostly predictive text and does not actually understand what it is doing.

-

“Overall, this panel was a demonstration of what happens when the people most affected by extractionism are allowed to define the rules, the pace, and the limits.”

-

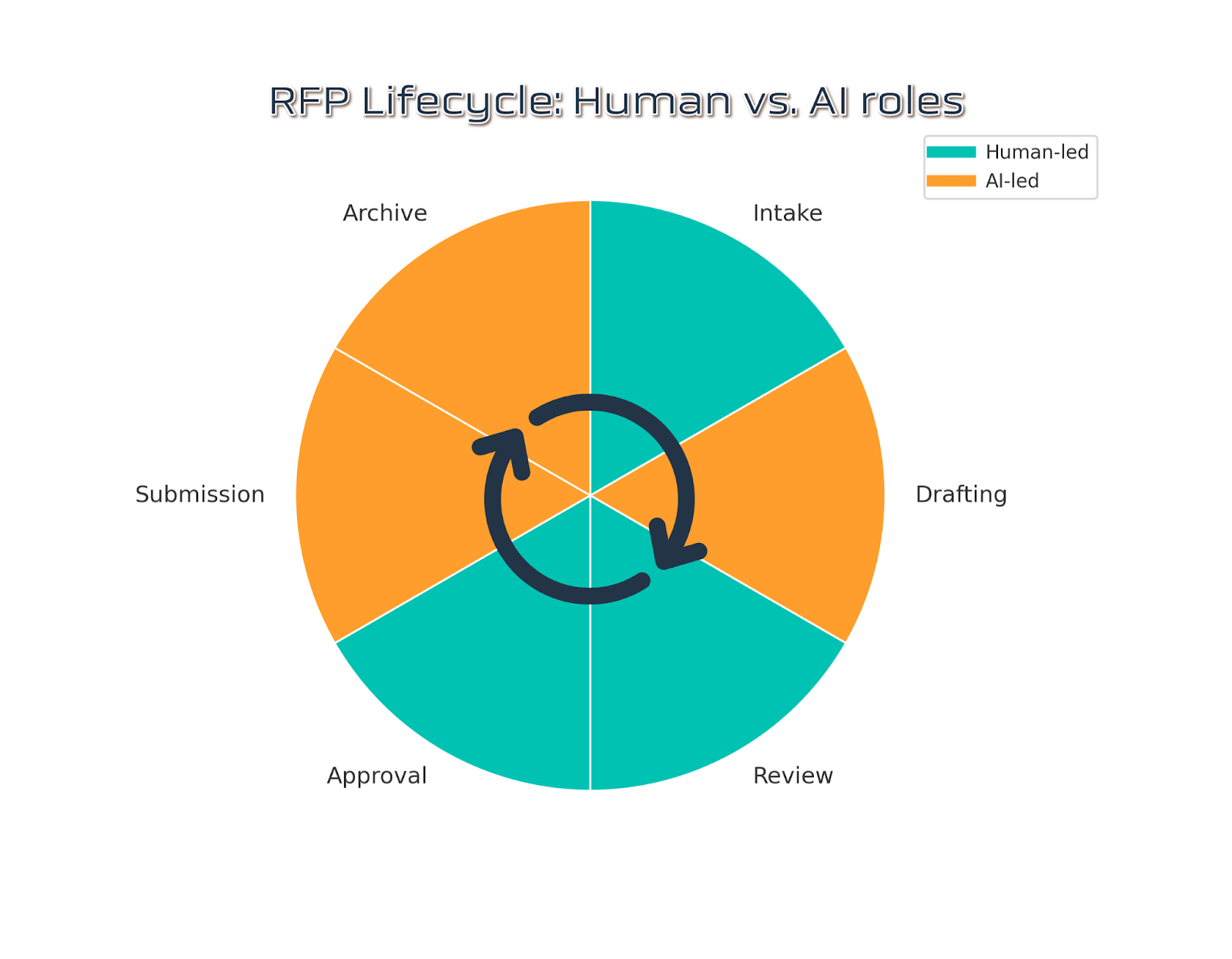

The organizations that will thrive won’t be those that resist these changes or those that thoughtlessly automate everything. They’ll be the ones that find the right balance between leveraging AI for efficiency and doubling down on the real human connections and mission-driven work that no algorithm can replicate.

-

There’s a temptation to turn everything into KPIs and metrics, but what we can measure is never exactly what we care about.

-

What does harmony look like when humans mediate it through machines?

-

The desire to learn about the world and make it better comes from our experience, but, as we know all too well, the work of doing those things doesn’t always need a direct connection to that primary motivation. Some of that work can be delegated to those who don’t share the vision, and this is where AI…

-

When working with AI, it’s helpful to think of it as an intern in their first week. In scriptwriting, AI is a first-week intern with an uncanny lack of awareness of how their work resonates with the human experience.

-

The real opportunity lies in balancing automation with human judgment, so that technology not only accelerates work, but also deepens impact.

-

Without knowing how to optimize answers for ‘truth,’ they’re modeling what humans do: tell stories, hedge, prevaricate, lie, do bad math, and sometimes, eventually, suss out the truth.