Inside “Methods Matcher” and how nonprofits can balance automation with human judgment

When a cyclone hits or prices spike overnight, humanitarian field teams don’t have hours to sift through PDFs. Mercy Corps’ new “Methods Matcher” compresses years of know-how into quick, context-aware, cited answers that teams can trust. Built with Cloudera and Tech To The Rescue, Methods Matcher is a practical pattern any mission-driven org can adapt with the right guardrails. Here, let’s unpack what Mercy Corps built, why it matters, and how other nonprofits can apply these lessons.

Mercy Corps is known for hiring more than 90% of its aid staff locally. In 2024 alone, its programs reached ~38 million people. Independent watchdogs rank the organization among the most financially disciplined and transparent nonprofits.

Mercy Corps is deeply embedded in humanitarian crises around the world, with 4,300 people working in 35+ countries (100+ historically). Sadly and unceremoniously, the gutting of a certain US governmental agency that, well, aids this type of organization led to the elimination of ⅔ of its staff.

Luckily, they are embedded in something else: building AI products. With the pro bono engineering help of Cloudera and Tech To The Rescue’s tech-for-good accelerator, they were able to build a retrieval-augmented generation (RAG) AI assistant that produces near-instant answers during crisis-level events. This is called Methods Matcher, and it helps field workers with anything from creating real-time comms to stretching budgets in the midst of exploding countrywide inflation.

What is RAG? It’s a way of using LLMs in which an organization’s proprietary data (the aforementioned PDFs, among others) is ingested and indexed so that the most relevant passages can be retrieved and added to the prompt when a question comes in. Given the environs of Mercy Corps’ field workers, it succeeds partly because it can work offline and partly because its risks are lightweight and the flagging of those risks is built in.
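To make the retrieval step concrete, here is a minimal sketch in Python. It is not how Methods Matcher is built: the document names, their contents, and the `retrieve` and `build_prompt` helpers are all hypothetical, and a simple word-overlap (cosine) score stands in for the embedding search a production system would likely use. The resulting prompt would then be passed to whatever LLM the organization has chosen.

```python
import math
import re
from collections import Counter

# Hypothetical snippets standing in for an organization's indexed documents.
DOCUMENTS = {
    "cash-transfers.pdf": "Cash transfers help households cope when market prices spike after a shock.",
    "cyclone-response.pdf": "After a cyclone, prioritize shelter kits, clean water, and early warning messages.",
    "mel-reporting.pdf": "Monitoring, evaluation, and learning reports summarize program outcomes for donors.",
}


def vectorize(text):
    """Turn text into a bag-of-words count vector (lowercased words only)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# "Ingest and index": precompute one vector per document.
INDEX = {name: vectorize(text) for name, text in DOCUMENTS.items()}


def retrieve(question, k=2):
    """Return the names of the k documents most similar to the question."""
    query = vectorize(question)
    ranked = sorted(INDEX, key=lambda name: cosine(query, INDEX[name]), reverse=True)
    return ranked[:k]


def build_prompt(question, k=2):
    """Assemble a prompt that shows the model its sources and asks for citations."""
    context = "\n".join(f"[{name}] {DOCUMENTS[name]}" for name in retrieve(question, k))
    return (
        "Answer using only the sources below and cite them by name.\n"
        f"Sources:\n{context}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("What should we do after a cyclone hits?"))
```

Because the prompt carries the source names alongside the text, the model’s answer can point back to specific documents, which is what lets a human reviewer check a citation before acting on it.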

The emerging evidence of impact is strong, but admittedly no hard impact metrics are available yet to show how positively the organization is impacting people; the team is still piloting and developing ways of collecting qualitative data, which often tells a stronger story than the numbers. What they can measure is speed and efficiency: for a tech product that took less than two months to build, they were able to key out 82 different interventions in 44 early-adopting countries using not *gadgets* but information literacy. Monitoring, Evaluation, and Learning (MEL) reporting went from weeks of development to outlines in 15 minutes. Estimates (with all the aforementioned caveats) suggest about 9.5 million lives saved since the AI product was put into use.

Business Insider reports that Mercy Corps and Cloudera are developing the product into something closer to Agentic AI, with the aim of building up automation and comms.

Not every nonprofit is as large as Mercy Corps, though. Smaller institutions could have access to Agentic AI, which has the potential to infer more, but because it works with more data, it may run slower and offer less functionality in areas with poor infrastructure (which only gets worse in crisis situations). Even so, most small nonprofits don’t have Mercy Corps’ high ratio of employees in the field. At the end of the day, most nonprofits don’t *need* in-house AI projects; they can benefit more from simple, standardized workflows, clear governance policies, and safe experiments with AI.

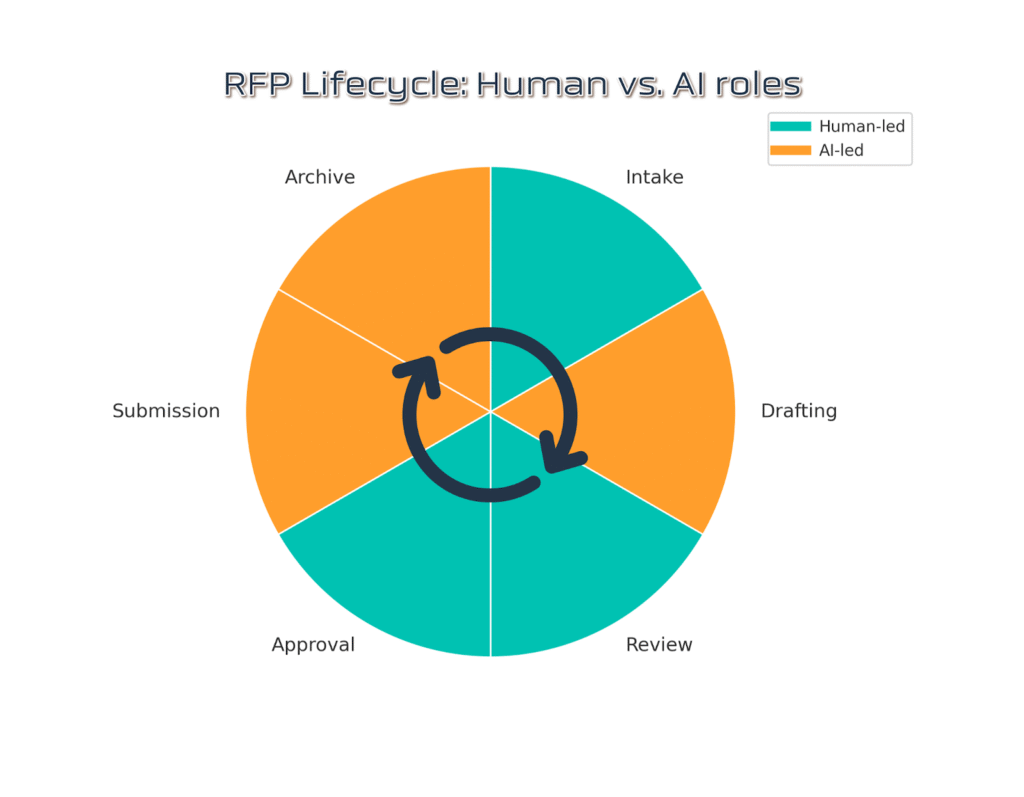

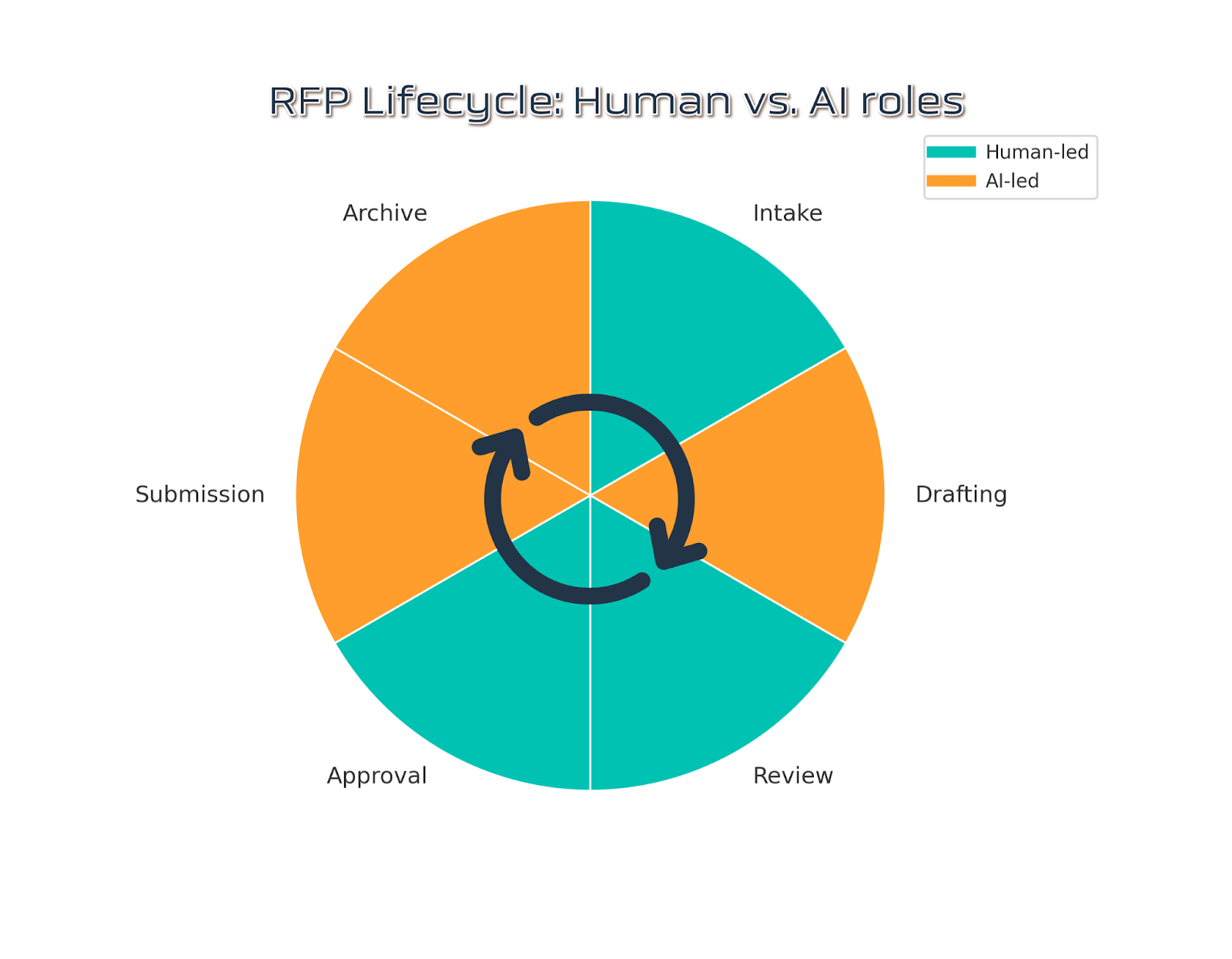

That’s where AlignIQ comes in! When we design Agentic AI-enabled proposal support and impact reporting, we usually structure the work around a few predictable categories. After researching which AI vendor(s) the organization uses, we identify key roles and stakeholders, so it’s clear who’s responsible for each part of the process. Then we define the scope and deliverables: the technical build and the outcomes the organization expects to see. We also outline data security and privacy measures, since keeping information inside the organization’s systems is essential. Another piece is workflow integration, describing how the tool connects to existing processes and platforms rather than creating new silos. We add prompt engineering standards, so any AI-generated output supports proposal development directly and aligns with organizational needs. Finally, we map timelines and milestones, giving the team a clear picture of how the project will unfold and when results can be expected. Ultimately, impact reporting built with our support can lead to better long-term outcomes.

In many ways, experiments like Mercy Corps’ RAG assistant show just how quickly the landscape is shifting. The lesson isn’t about a single tool or framework, but about how organizations choose to integrate new capabilities into their existing systems and values. The real opportunity lies in balancing automation with human judgment, so that technology not only accelerates work, but also deepens impact.

[In this post, we used AI for polish, not purpose.]

To learn about other orgs doing AI for Good (including our own, of course), check out our AI for Mission Driven Organizations tag.

In particular, we love this recent post on how AI is helping Bridges to Prosperity build infrastructure to accelerate African rural economies.

Want to know how we can propose Agentic AI and a strong governance policy at your org? Schedule a sit-down with us.
