Navigate AI Risks on a Guided Path

In our thirty-minute minicourses, we teach you to experiment with AI assistants safely and confidently at work. We focus on the latest safeguards to mitigate risks to your company’s data, reputation, and values. Learn practical guardrails for ChatGPT, Claude, Gemini, Copilot, and more, in workshops designed for purpose-driven professionals. Responsible best practices, skill development, and compassion are built into every session.

Team of 5+? See group options

Who is this for?

- Purpose‑driven professionals and teams.

- Individual contributors who want to use GenAI safely and ethically.

- Managers who need up-to-date guardrails before instituting a formal policy.

- IT, Security, Legal, and Ops teams coordinating adoption.

- People looking to grow their careers by building their AI skills.

Who is this with?

- A purpose‑driven team!

- A senior data science professional with ten years of experience in health care.

- A curriculum and teaching expert with twenty years of experience.

- Folks thrilled to work with professionals at different levels of AI literacy and skepticism.

- A team dedicated to seeing AI work for good.

How does it work?

- Each virtual thirty-minute session covers one AI risk.

- Minicourses make learning more memorable and give you more options.

- Shorter courses are more affordable and easier to fit into your schedule than lengthy ones.

- Supervised activities and resources are included in the cost. Unlike asynchronous coursework, you get personal attention.

Courses at a Glance

Free webinar

Want a clear understanding of what AlignIQ does for individuals and organizations? Join a free webinar before our paid courses begin. In it, we’ll introduce what we see as the top nine risks responsible AI users need to know about.

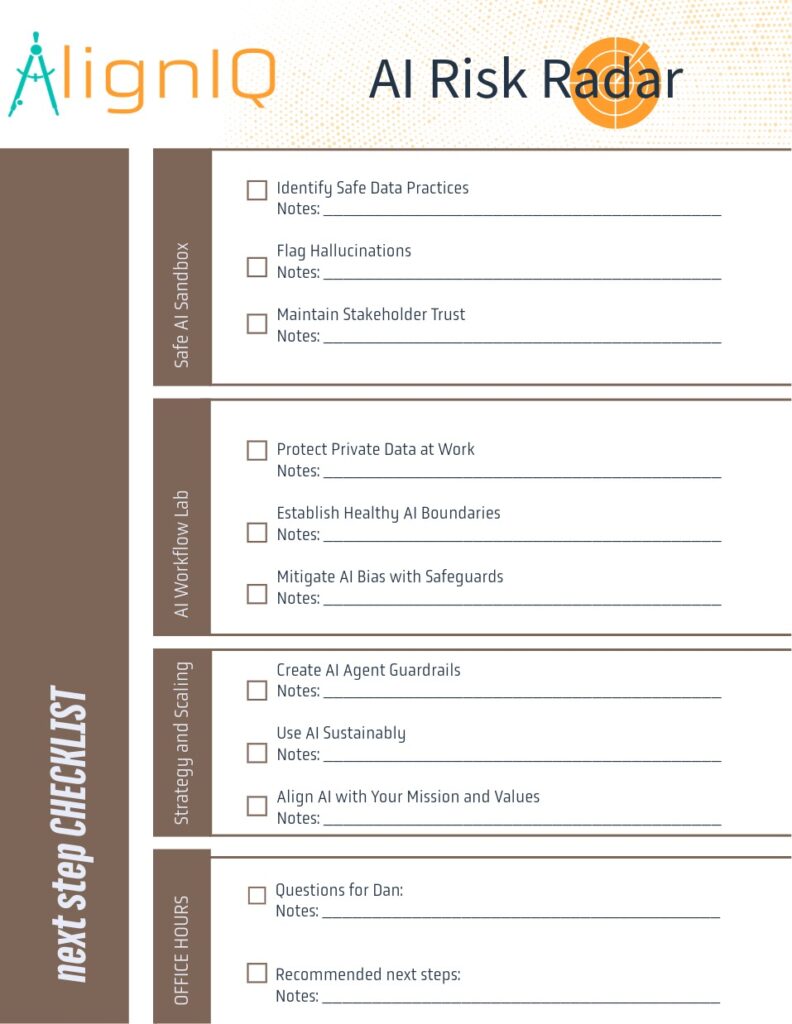

Tier One: Safe AI Sandbox (starting September 2025)

Identify Safe Data Practices

This workshop introduces a three-tier system to distinguish secure AI use cases from ill-advised ones and illustrates the types of datasets that are most secure.

Flag Hallucinations

Using real-world examples, learners will become discerning inspectors of AI outputs and learn basic prompt engineering techniques that head off hallucinations.

Maintain Stakeholder Trust

We’ll guide you in establishing norms around AI co-creation and designing rubrics to decide whether AI co-created content should be shared with clients.

Tier Two: AI Workflow Lab (starting October 2025)

Protect Private Data at Work

Benefit from our team’s extensive experience masking data to keep sensitive information private from LLMs, and learn practical techniques for using them safely.

Establish Healthy AI Boundaries

Whether you’re an AI novice or an expert, you’ll come away with approaches for auditing your own or your team’s over-reliance on AI.

Mitigate AI Bias with Safeguards

Explore why AI models can produce discriminatory outputs and how to intervene with human-centered values.

Tier Three: Bridge to Strategy and Scaling (starting December 2025)

Create AI Agent Guardrails

Learn how to safely supervise ChatGPT’s Agent Mode and similar AI agents, defend against prompt injection, control connectors, and harden workflows.

Use AI Sustainably

We invite you to examine AI’s environmental costs and consider how your organization can take the lead in incentivizing greener, more responsible AI use.

Align AI with Your Mission and Values

In this course, you’ll learn to level up your prompt engineering to better establish, refine, and amplify your company’s vision and competitive advantage.

Still have questions?

We’re happy to help, whether you’re exploring group workshops, unsure which session fits, or want to talk about custom solutions.

Outcomes you can count on

- A personalized game plan for immediate AI agent use.

- Exactly what never to paste into public models, and how to anonymize data.

- Verification routines to catch hallucinations and misinformation.

- A concise Ethics & Mission‑Alignment checklist.

- Prompts to spot and correct bias & tone issues fast.

- Healthy habits to prevent over-reliance and skill atrophy.

- Clarity on public vs. enterprise tools and when to use each.

- Managing-up tools to encourage responsible AI governance.

- Templates for team policies and individual commitments.

Flexible Workshop Pricing Plans

Pick the plan that fits your pace, from the single course you need most to a complete set. Where recordings are enabled, replays are included during the limited access period.

Top Nine AI Risks in 30 Minutes

Live introduction to the core principles needed for safe experimentation.

- Accelerated introduction to risks our courses aim to mitigate

- Risk Radar Cheat-Sheet

- Interactive Q&A

Limited Time Pilot Pricing

$59 (regularly $79)

Individual Workshops

30-minute live course, Safe AI Use Template, resources, and replay.

- Choose From Safe AI Sandbox Courses: Privacy & Data Security Essentials · Hallucination · Ethics & Alignment

- Interactive Q&A

- Collaborative learning

- Hands‑on Practice

- Resource Toolkit

- One month access

MOST POPULAR

Limited Time Pilot Pricing

$149 (regularly $199)

Core Bundle

All three Tier One modules, Safe AI Use Handbook & replay.

- Access to all Safe AI Sandbox Courses: Privacy & Data Security Essentials · Hallucination · Ethics & Alignment

- Interactive Q&A

- Collaborative learning

- Hands‑on Practice

- Resource Toolkit

- LinkedIn badge

- One month access

Group Options

Teams of 5+ get discounted seats, a private Q&A, and optional tailoring to your data classifications, approved tools, and disclosure norms.

- 5+ seats: 10% off

- 25+ seats: 20% off

- Optional private cohort & office hours

- Policy & template tailoring available

FAQ

Do I need a paid AI plan?

No. We demonstrate with free/public tools and explain what might change with enterprise or team plans, including stronger data controls and additional features.

Will I earn a certificate from attending a minicourse?

We offer LinkedIn badges to those who attend all courses within one tier, and a certificate to those who complete a full workshop. See the full requirements here.

Can I bundle three mini-courses from different tiers?

This option isn’t available yet, but it may be coming soon! To earn a badge, your courses need to be bundled by tier.

Can you tailor content to our tools and policies?

Yes. Group Options include discounted seats and a private Q&A. Optional tailoring to your data classifications, approved tools, and disclosure norms can be negotiated. Please contact us at hello@aligniq.ai so we can build a quote for you.

Are sessions recorded?

Recordings are available when enabled. Replays are included with both the Individual Workshop and Core Bundle access passes.

How do you keep guidance current?

We continuously monitor model/provider updates and regulatory changes in the US and EU. We also refresh templates and examples on a rolling basis.

Will you offer refunds if I cannot attend a course?

We offer a few options if you cannot attend your scheduled session.

- Every registration includes one month of access to the course.

- Within one month of your scheduled course, we will send you a recording of the session, if one is available.

- During your one-month access period, we will offer you a seat in another section of the same class. This period begins on the originally scheduled date of the first paid session you registered for. Please see a breakdown of scheduled options on Eventbrite, or a simplified one here.

- If none of the remaining sections of your class fit your schedule during the one-month term, you may request a makeup class. Because a makeup class is a personalized session scheduled specifically for your convenience, it costs the regular session price plus a modest 25% fee to cover the instructor’s dedicated time.

Please learn more about our course terms and conditions here.

Testimonial

“AlignIQ’s initial presentation helped me think more about what areas of my non-profit, mission-driven organization’s work could benefit from AI solutions, and what some of the challenges might be, too. It’s exciting to think about how we could use our limited resources to focus more on achieving our mission rather than on tasks that AI could do for us!” – Karen S., Nonprofit Program Director