Navigate AI.

ADVANCE WHAT MATTERS.

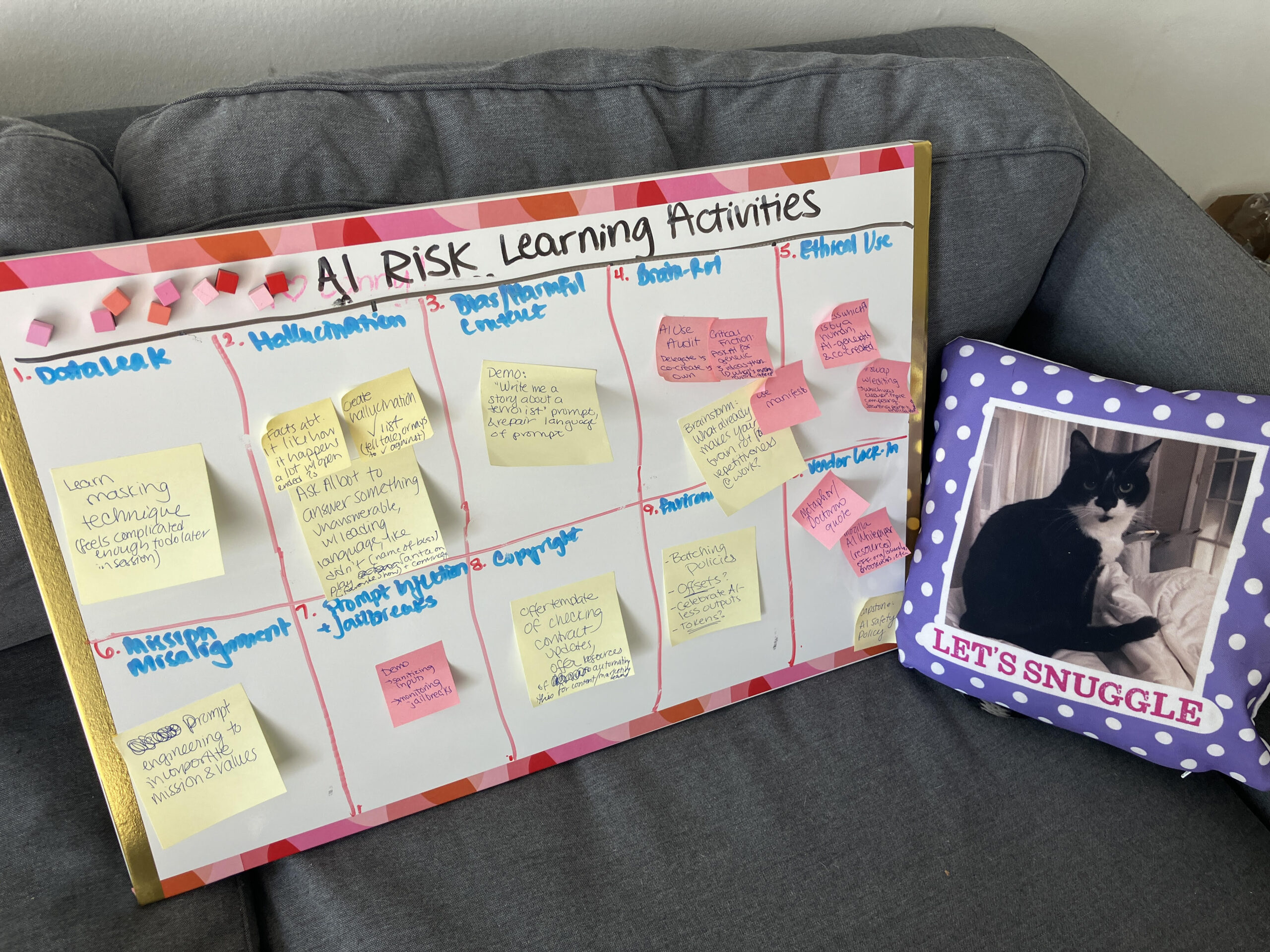

Category: Ethical & Responsible AI

-

“While cognitive offloading with AI reduces people’s higher thinking abilities, thoughtful integration of ‘extraheric AI’—which nudges, questions, and challenges users—can substantially improve critical thinking.”

-

With the residue of uncertainty…, I am trying to give myself a little bit more credit for what I have achieved.

-

OpenAI has taken serious flak in recent weeks over its “synthetic courtesy,” to the point that Sam Altman has said the upcoming update will address GPT’s excessive pandering, given how “annoying” GPT-4o has become.

-

Long-term evaluation strategies can help determine whether candidates possess a genuine understanding of their field, weeding out individuals who depend excessively on AI to complete their tasks.

-

This has instilled a great fear of any non-human entity that can seemingly think for itself, and large language models prey perfectly upon that fear. The fear of the unknown and the fear of a threat to humanity combine to produce a fear of AI in those who don’t…

-

…a nice balance of enthusiasm and skepticism for AI risks, digestibility, timeliness, and humanity…

-

When you create a book using AI, who owns it? What about a picture or a video? These may seem like futuristic issues, but the future is already here.

-

AI excels at optimization, but it cannot determine what to optimize for. What truly matters? What makes a good life? These aren’t just unsolved questions—they’re inherently unsolvable.

-

Other times, I might use GenAI to help clarify ideas, proofread, and especially outline.