The basic nonprofit hiring checklist for a world swimming in algorithms.

by Caroline Stauss

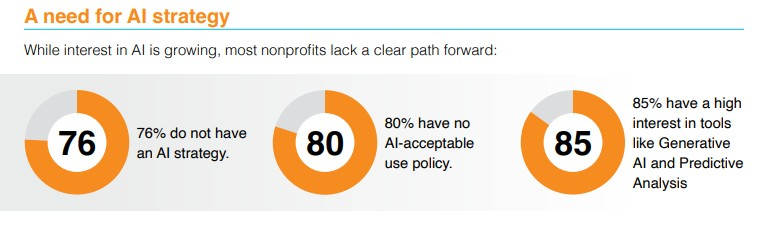

On one of my recent LinkedIn jaunts, I saw something that really surprised, nay, alarmed me: something like 65% or more of nonprofit managers have not issued any kind of directive on using AI. So shadow AI is *necessarily* a thing, and data on sensitive populations and subjects has undoubtedly, irrevocably made its way into vast sets of training data.

[I unfortunately can’t cite this info, because LinkedIn makes it impossible for non-neurotypical/foresightless brains to actually find posts again, so just take my word and some other person’s (dunno whose) word for it. And maybe take a look at Tapp Network’s 2025 benchmark report or Non-Profit News, who reported on the matter post-election, pre-regnum, errrr, -inauguration, so their coverage may contain old info in this age of constant AI updates.]

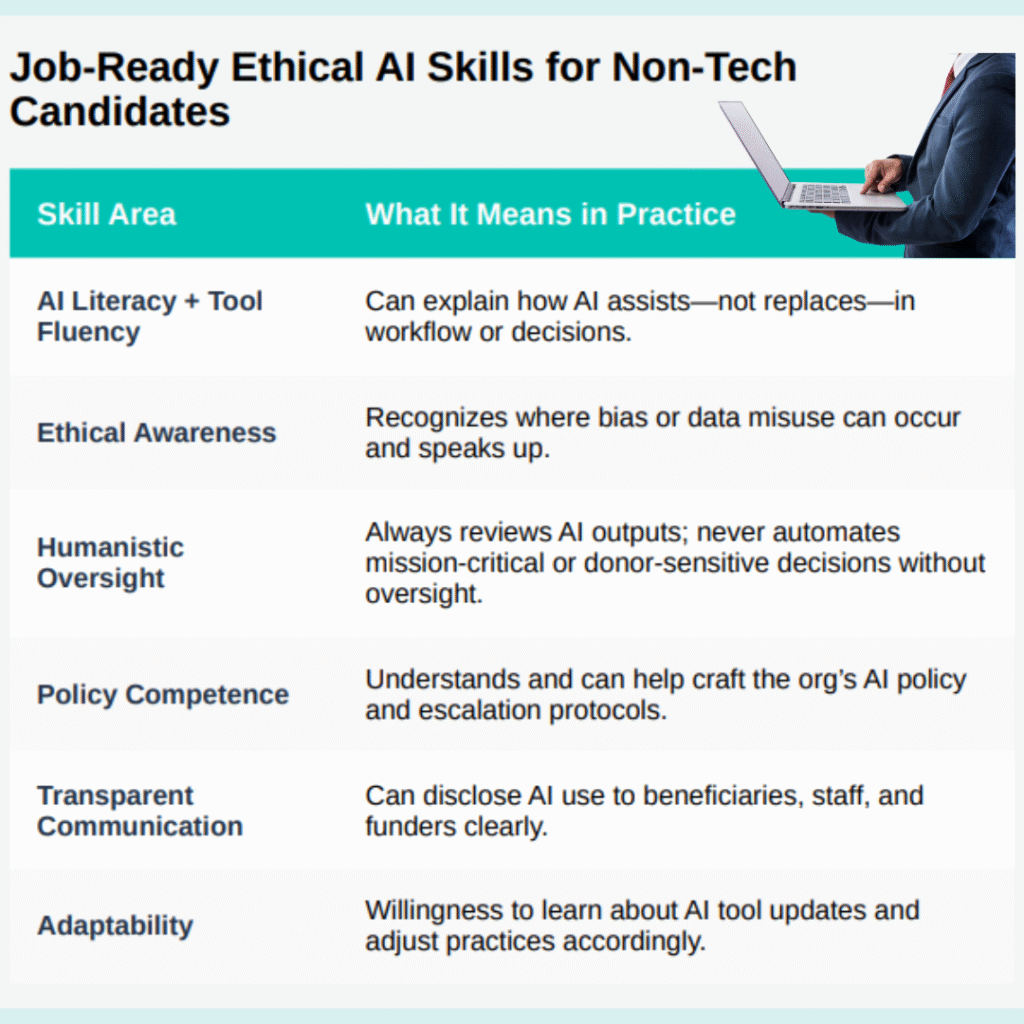

For the sake of these organizations (and leaders still wrapping their heads around AI), ethical AI skills are no longer a niche edge, but fundamental competencies.

What will they be hiring for? One thing that won’t change is that soft skills will still be prioritized. Even data scientists entering the sector have to pass a certain muster, according to The Australian. But the LinkedIn Nonprofit Talent Report from this year shows that the reverse is also true: demand is rising for staff with hard tech skills. Simply put, tech-ready hires are now critical for mission delivery. Think transparency, data privacy, human oversight, and minimizing harm, all wrapped up in one lovely job candidate and potential world-changer.

This signals a broader shift: employers now value candidates who understand how tools can and should be built responsibly. It also sets a new expectation for workers in the nonprofit sector: they must be able to tack tech skills onto their passion for changing the world for the better.

What this means for job seekers…

You need proof that you know how to work responsibly with AI. The easiest way to show this is through specific, lived examples. You can’t just say you’ve used ChatGPT–this might actually backfire, right? Instead, be specific: note how you used AI, where you overrode it with your own judgment, and what good came of it. For instance: “I drafted donor letters using ChatGPT, then manually edited them to preserve voice and accuracy. It was important to have a genuine voice to demonstrate gratitude for our community clean-water fundraiser.”

If you’ve been involved in creating, reviewing, or following your organization’s AI policy (most likely this is informal, but lean into it!), make that visible on your résumé. It signals awareness of governance, not just tool use.

What this means for hiring…

Set the tone by writing ethical AI expectations directly into job descriptions. That might look like asking for “experience using AI tools responsibly” or “familiarity with data privacy and bias issues.”

Interviews are also an opportunity to explore values in action. You need to learn how a candidate connects their tech competency to a nonprofit mission. Scenario questions are, as always, very valuable.

- How would you respond if AI flagged a donor as low-value, but you knew they’d been a loyal supporter?

- How do you check that an AI-assisted budget draft meets compliance?

- How would you ensure that your communications use the most inclusive language, and how would you balance that with the AI-generated drafting you’d do in your role?

Finally, make it clear that learning continues after hire. Offering onboarding and training in AI ethics and responsible tool use, or even better, asking new hires how they could contribute to further training, signals that AI ethics is woven into your culture, not left up to chance.

The risks of ignoring the skills gap are palpable. Data leaks, erosion of trust between organizations and donors, and the exclusion of vulnerable populations can all undermine a nonprofit’s capacity to ensure its survival in our, erm, intraregnum zeitgeist.

Hiring in AI’s next phase means sifting for moral judgement, not just tech-happy tool use. (This means, YES, HUMANS HAVE TO READ RESUMES. SORRY.) In nonprofits, mission alignment has to be what sets us apart. Ethical skills build trust with donors, communities, teams. If AI is the future, ethics are its integrity, and nonprofits have a responsibility to lead the way with their staffing choices.

Upskilling pathways we recommend:

ALWAYS START HERE: Informal conversations with your network on how to avoid shadow AI.

Hiring us, naturally.

Free guides and courses: The State of AI in Nonprofits, Fast Forward’s Policy Builder, GlobalGiving.

If you need some AI basics, check out my summary of AI for Everyone, one of Coursera’s highest-enrolled courses.

If you need to reflect on what ought to be your ethical AI expectations at work, read From Remix to Action.

If you’re curious about how universities are shaping soon-to-enter-the-workforcers, read a post from one of those selfsame folks.
