AI, don’t try to flout us

Always seeking out more stories about AI for good (including bookmarking the best resources and having a fit when Chrome’s update deletes them), I came across another UN AI for Good Summit 2025 presentation that struck my fancy, because it was all about decentering. Decentering has been on my mind lately: I attended DNNF’s UnConference to unearth the robust ecosystem of the Netherlands’ entrepreneurs “on the margins,” not to mention *waves hands around at all the oppressive tactics being deployed against the global majority by the few with wealth and power.*

But just as the DNNF conference felt somewhat performative (it was sponsored by Rabobank, but at least they knew their audience enough to season their food), AI’s ecosystem of philanthropists (like us) can collapse into good vibes and glossy dashboards. What if we listened to the voices in ethical AI that have the most to lose?

Perhaps the country most deeply immersed in the power of DEI these days is Canada. Their second-largest telecom company, TELUS, even has an Indigenous Advisory Council (IAC) to demonstrate their commitment to Truth and Reconciliation. I am crying inside imagining even the legal standing of something similar in a large US corporation, but that’s neither here nor there.

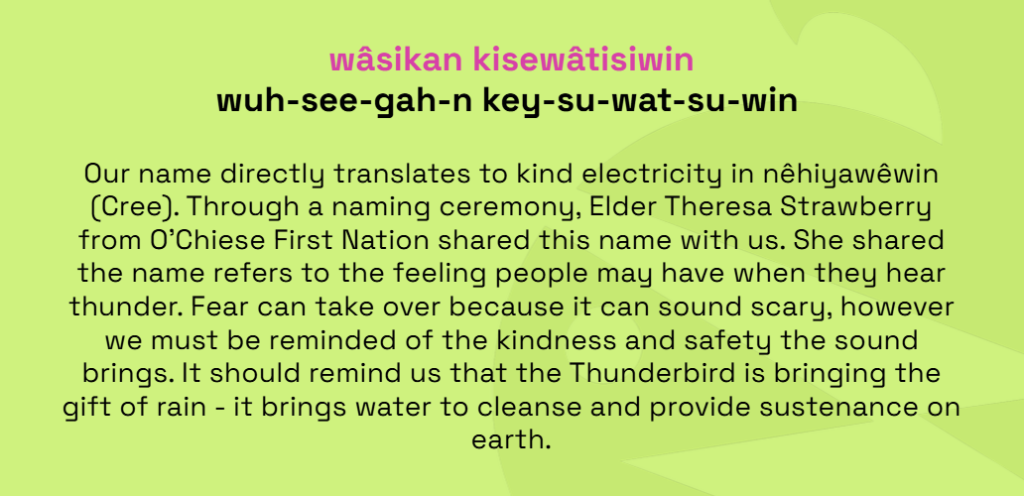

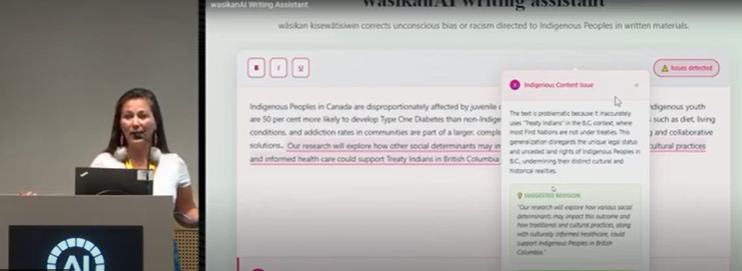

Key on the panel was Shani Gwin, CEO of wâsikan kisewâtisiwin, an AI app being developed to combat bias and misinformation about indigenous peoples. When biased or inaccurate text is entered into the chatbot, it suggests alternatives to problematic language, explains why that language is harmful, and surfaces historical context. Some chatbots out here actually chasing accountability as opposed to fluency.

Overall, this panel was a demonstration of what happens when the people most affected by extractionism are allowed to define the rules, the pace, and the limits.

Based on their lengthy and lively and controversial conversation at the summit, it doesn’t seem like they’re just putting this committee on a pedestal to trot out at UN summits. They’re throwing the full weight of truth in asserting that Western quantitative data is not the only legitimate epistemology. Western tools (like AI) might “scientifically” discover something that is well mapped out by indigenous knowledge. AI can only see what it counts.

Why are AI tools built? Because humans are exhausted. But who’s benefiting from them? The ones who haven’t really been building anything or even properly advocating for themselves: the corporate cogs. Community empowerment and challenging conventional wisdom are bedrocks of indigenous knowledge. AI reclaims mental space for those who are less mired in the need for it.

AI artwork? Not about art, not even about intellectual property. It’s about sovereignty. It’s about livelihood. And there is no symbolism without human creation. It’s very clear that AI does not have a spirit. If the training data appropriates art to promulgate probabilistic art, this is not very different from when the whitefaces appropriated Native Land. This issue, when flagged by the IAC, was actually taken seriously. AI should create “nothing about us without us.”

Like the indigenous advocates TELUS invited into purple teams to test for cracks in the AI system (and who found them early and often), we need to be emboldened to break things. In the interests of reconciliation, this work must yield indemnity. AI ethics without compensation is extraction with better branding.

At AlignIQ, one of the most important things we teach is building the question of when we shouldn’t use AI into our anchor workflows. I’ve also learned that we need to be more insistent in promoting AI tools like wâsikan kisewâtisiwin, Justice.AI, and Thaura for the times we want to do right by the true stewards of our world.

TELUS’s IAC advocates for reconciliation more structurally, not simply as a caveat in a slide deck. And this is the way that AI ethics should be: the constraints of the system, point blank period. Just as the elders are keys to indigenous “governance,” ethical tenets need to be baked into everything the world derives from whatever good *can* come from AI. So let’s keep challenging ourselves!

What would it take to treat AI deployment as a relationship you have to maintain, not a system you install?


[In this post, we used AI for polish, not purpose.]
